Installations

Critique

(2018)

Custom software (Node-Red, MaxMSP), computer, two-channel audio playback, HD monitor

Before we head to the movies or attend a concert, our perception of the film we are about to watch or the music we are about to hear is often shaped by the positive or negative reviews we read on blogs or in entertainment media. In today's world of instant criticism, with unfettered access to forums for posting opinions on everything from works of art to artisanal burritos, our experiences are often colored by opinions other than our own. My interest in this emerging social pattern led me to design an installation that condenses the process into a single artwork. It scans web-based criticism of a chosen musical work and uses the negative or positive statements it finds to warp the way the work is presented, so that the audience hears the work only through the lens of its critics and not in the form its creator intended.

In Critique, participants can select one of five musical compositions to listen to, and the software environment plays back an audio recording of their selection. As the recording plays, a review of a live performance of the work is displayed onscreen, and the audio is modulated according to an analysis of the emotional intensity of the author’s words. The environment uses the Node-Red programming tool to source text from specific web pages, prepare it for sentiment analysis, communicate with the IBM Watson sentiment analysis service, and parse the resulting data for use by the audio effects.
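The last step, turning sentiment data into audio effect settings, can be illustrated with a minimal sketch. This is not the patch used in the installation: the function name, the effect parameters, and the assumption that the score arrives as a number between -1 and 1 are all hypothetical.

```python
def sentiment_to_effect(score: float) -> dict:
    """Map a sentiment score in [-1.0, 1.0] to hypothetical effect settings."""
    # Clamp so that out-of-range input cannot produce extreme settings.
    score = max(-1.0, min(1.0, score))
    # Emotional intensity ignores whether the review is positive or negative.
    intensity = abs(score)
    return {
        # Assumed mapping: negative reviews slow playback, positive ones speed it up.
        "playback_rate": 1.0 + 0.5 * score,
        # Assumed mapping: stronger language in either direction adds distortion.
        "distortion": intensity,
    }
```

A neutral sentence would leave the recording untouched, while a strongly worded one in either direction would distort it.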

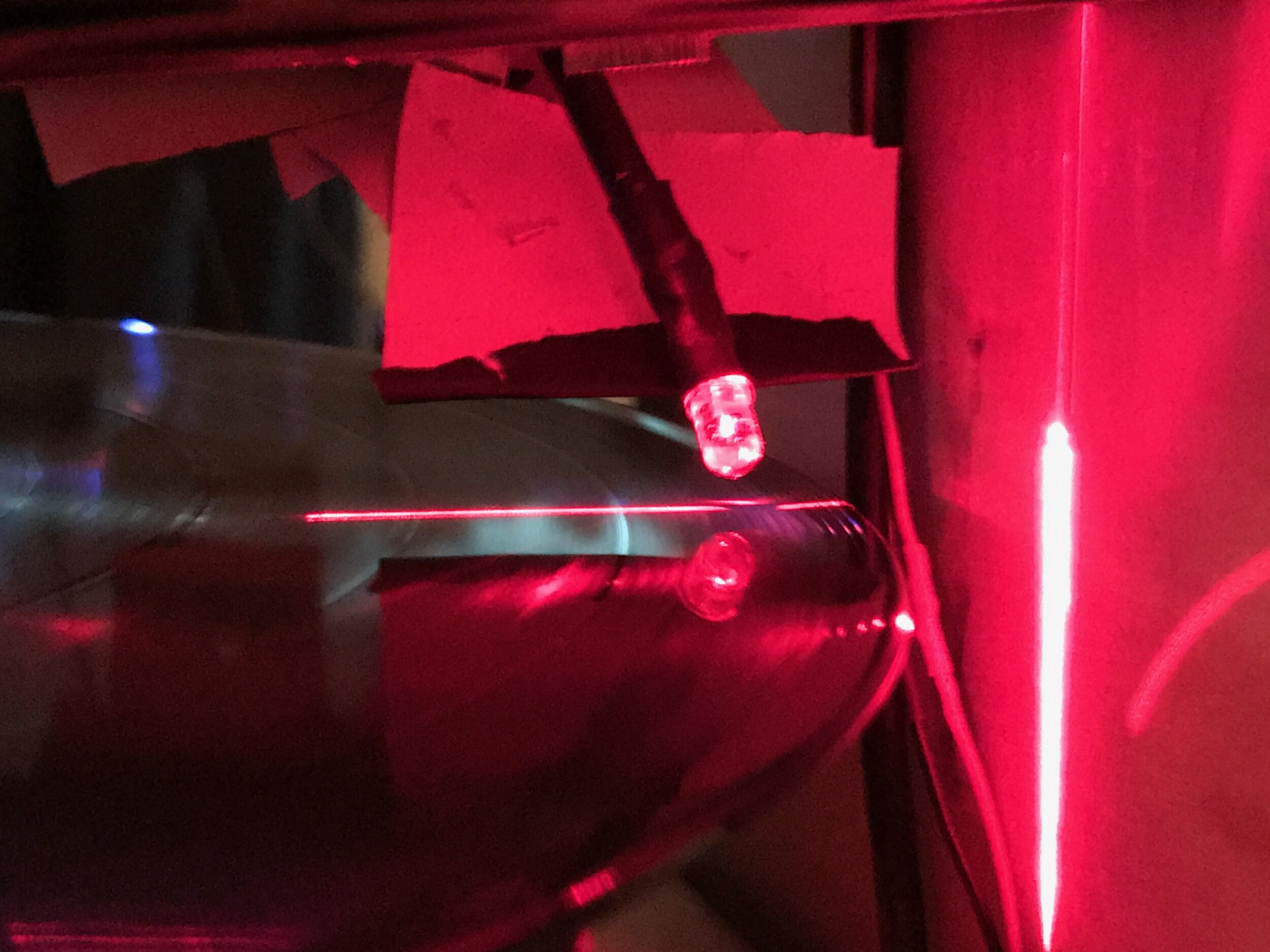

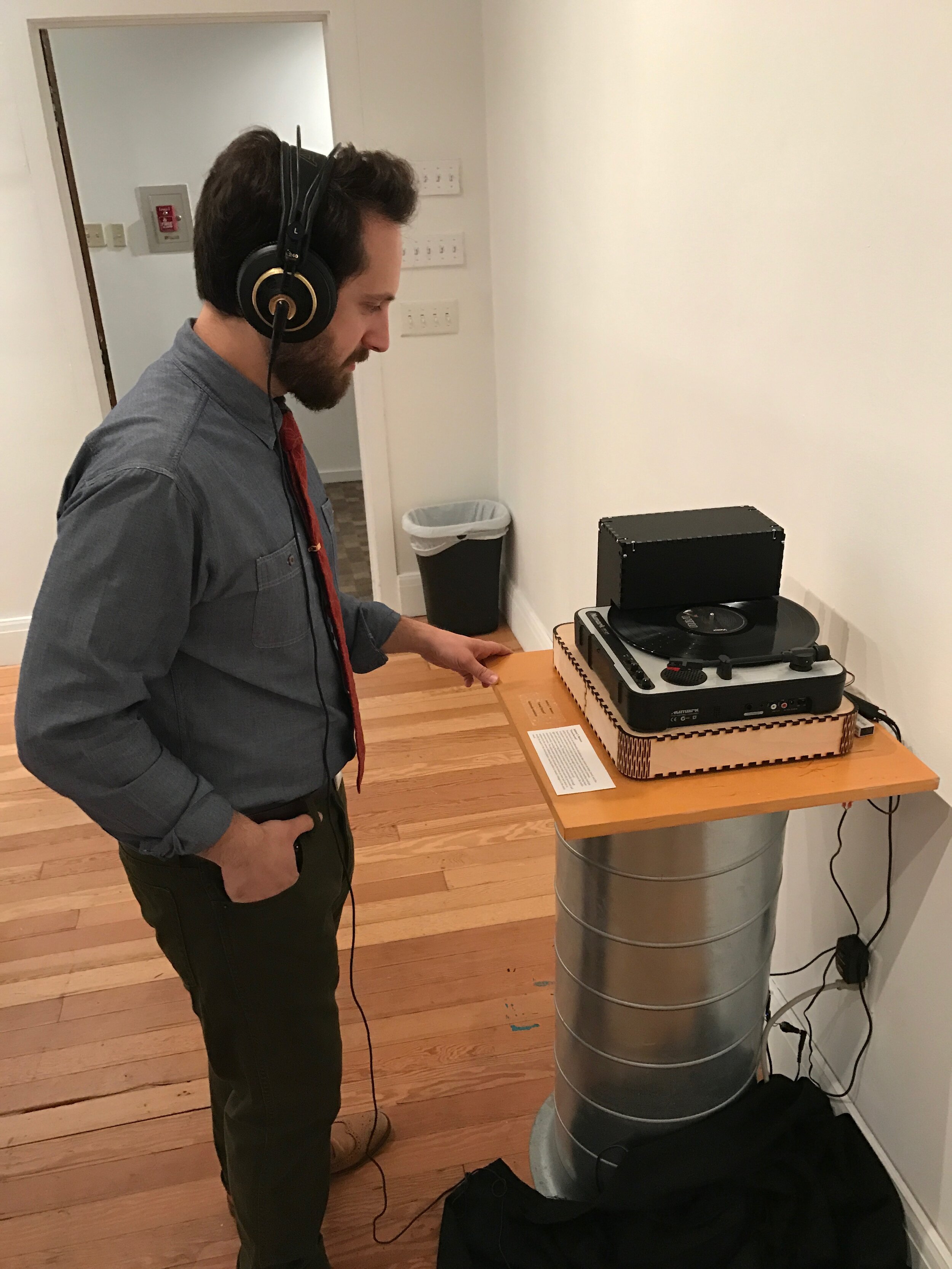

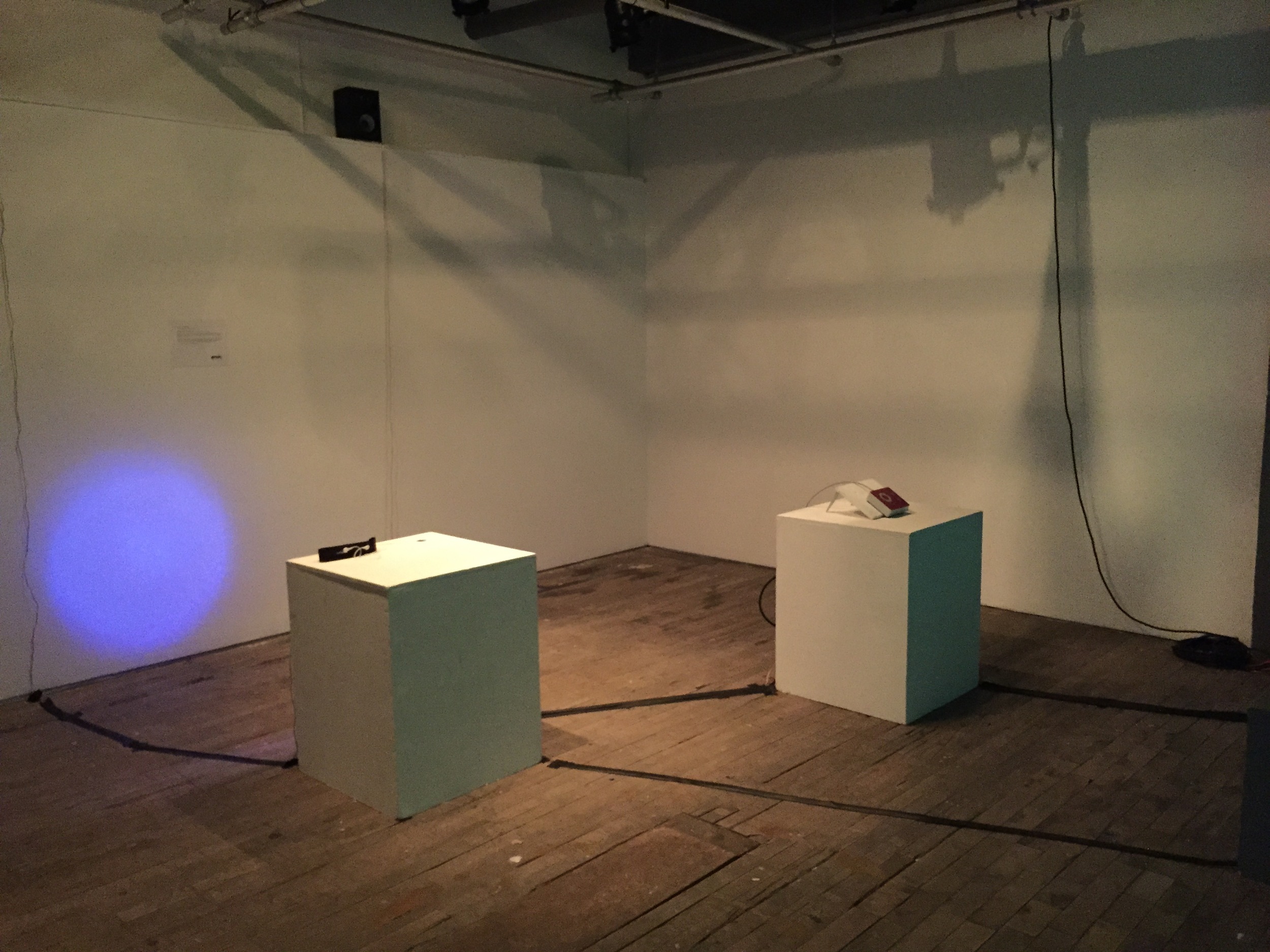

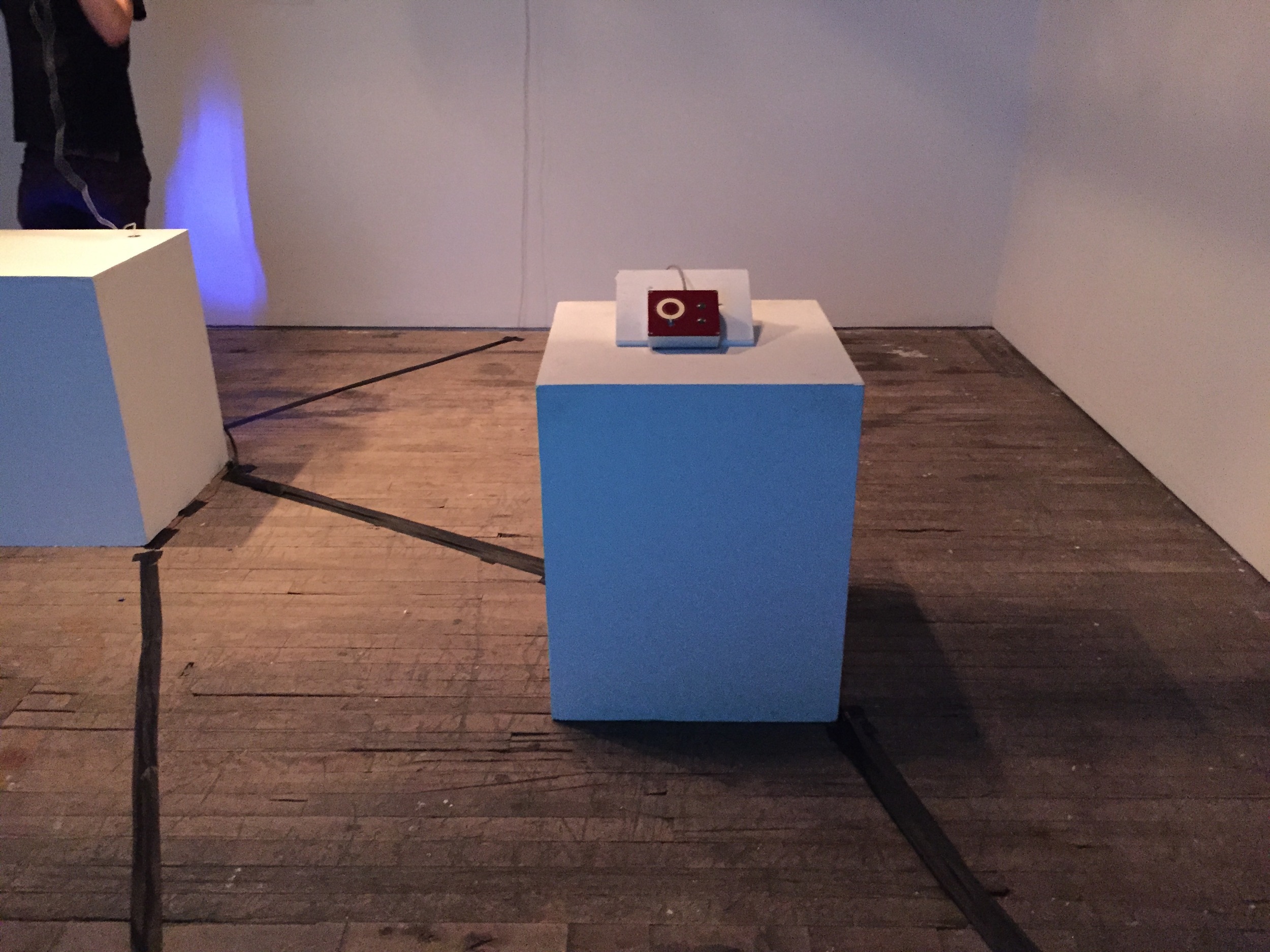

Travel Record

(2017)

Laser-read record player, custom software (MaxMSP), Arduino, Lite2Sound, computer, two-channel audio, fabricated enclosure

Travel Record is a multimedia installation that centers on loops, travel, and the ability to find beautiful rewards in unconventional and complex systems.

A computer-controlled record player generates sonic material by reading multiple bands of grooves on a vinyl record at once, translating reflected laser light into audio. The listener hears multiple parallel portions of the songs etched into each record simultaneously, which creates formless ambient textures out of traditionally through-composed music. The custom software then both processes and analyzes the generated audio and decides how and when to modify the speed of the record player, causing subtle or drastic changes to the sonic result.
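The feedback between audio analysis and turntable speed can be sketched roughly as follows. The actual analysis lives in the MaxMSP patch and is not described here, so the loudness measure, the thresholds, the step size, and the speed range below are illustrative assumptions only.

```python
import math

def rms(samples):
    """Root-mean-square level of a block of audio samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def next_speed(current_rpm, samples, quiet=0.1, loud=0.5, step=2.0,
               min_rpm=16.0, max_rpm=78.0):
    """Choose the next turntable speed from the level of the latest block.

    All thresholds and limits are assumptions made for this sketch.
    """
    level = rms(samples)
    if level < quiet:
        current_rpm += step  # quiet texture: speed up
    elif level > loud:
        current_rpm -= step  # dense texture: slow down
    # Keep the speed inside the assumed range.
    return max(min_rpm, min(max_rpm, current_rpm))
```

Calling `next_speed` once per analysis block yields gradual drift; a larger `step` would produce the more drastic changes.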

All technology used in this installation was designed by the composer, except for the Lite2Sound device, which was designed by Eric Archer.

The installation version of this work premiered on April 4th, 2017, at the Firehouse Gallery in Baton Rouge, LA.

A live performance version of this work premiered at the Old State Capitol in Baton Rouge as part of the High Voltage Concert series in April 2017.

John Cage’s Four4 for Robotic Percussion

(2017)

Arrangement for solenoids, motors, fabricated enclosures, and Arduino

John Cage's Four4 arranged for robot percussion by the EMDM Ensemble at LSU. A collaboration with Eric Sheffield, Landon Viator, and Brian Elizando.

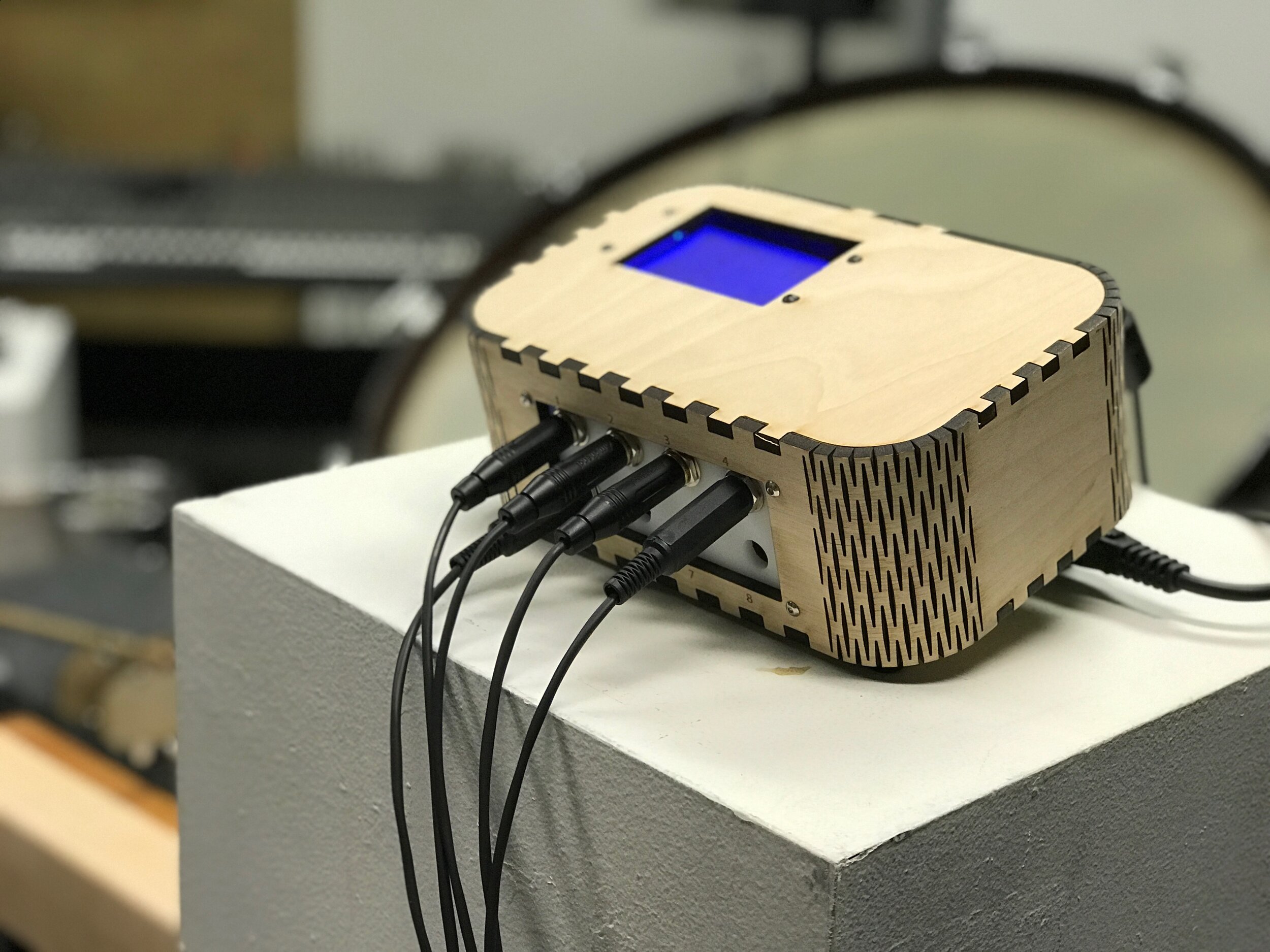

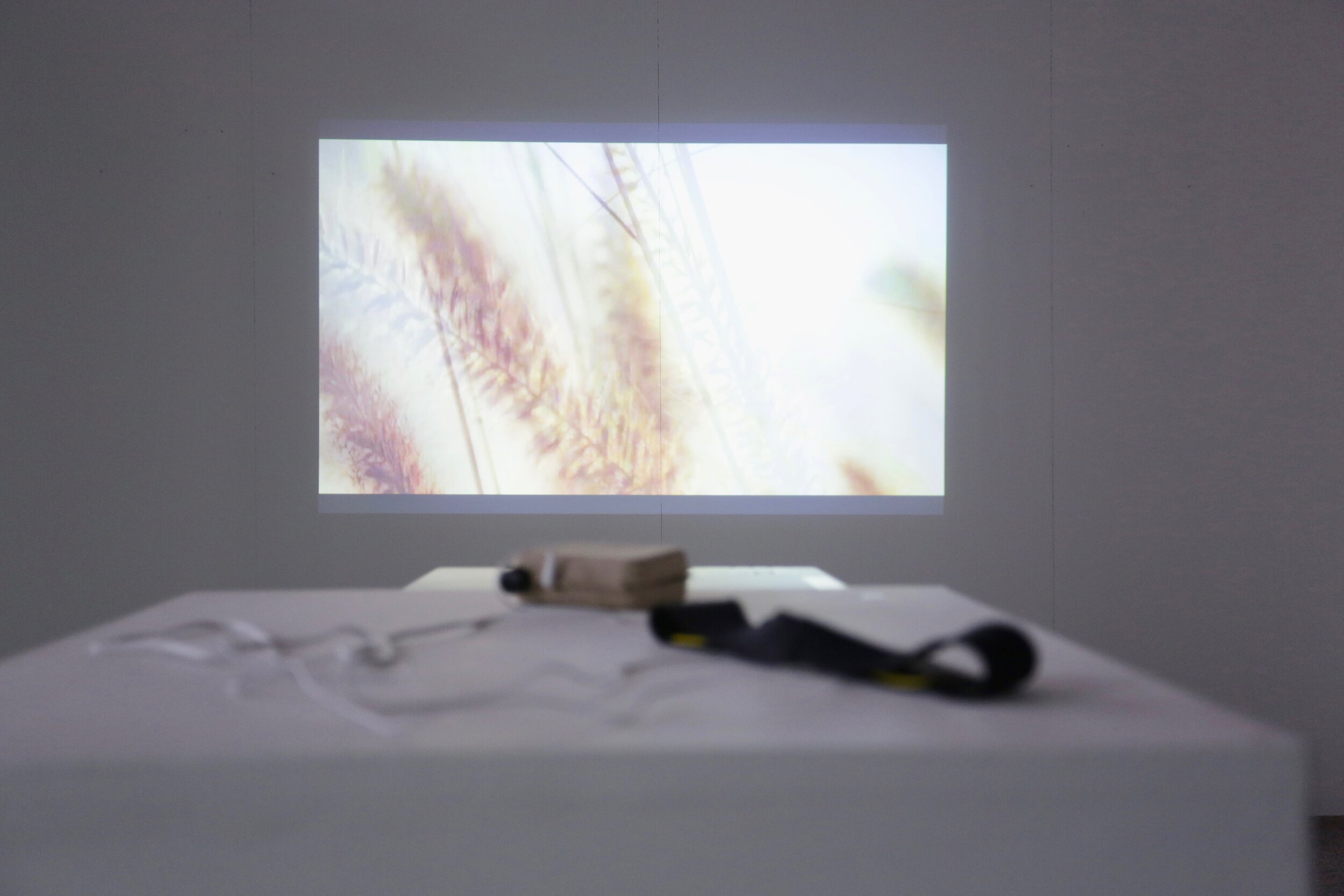

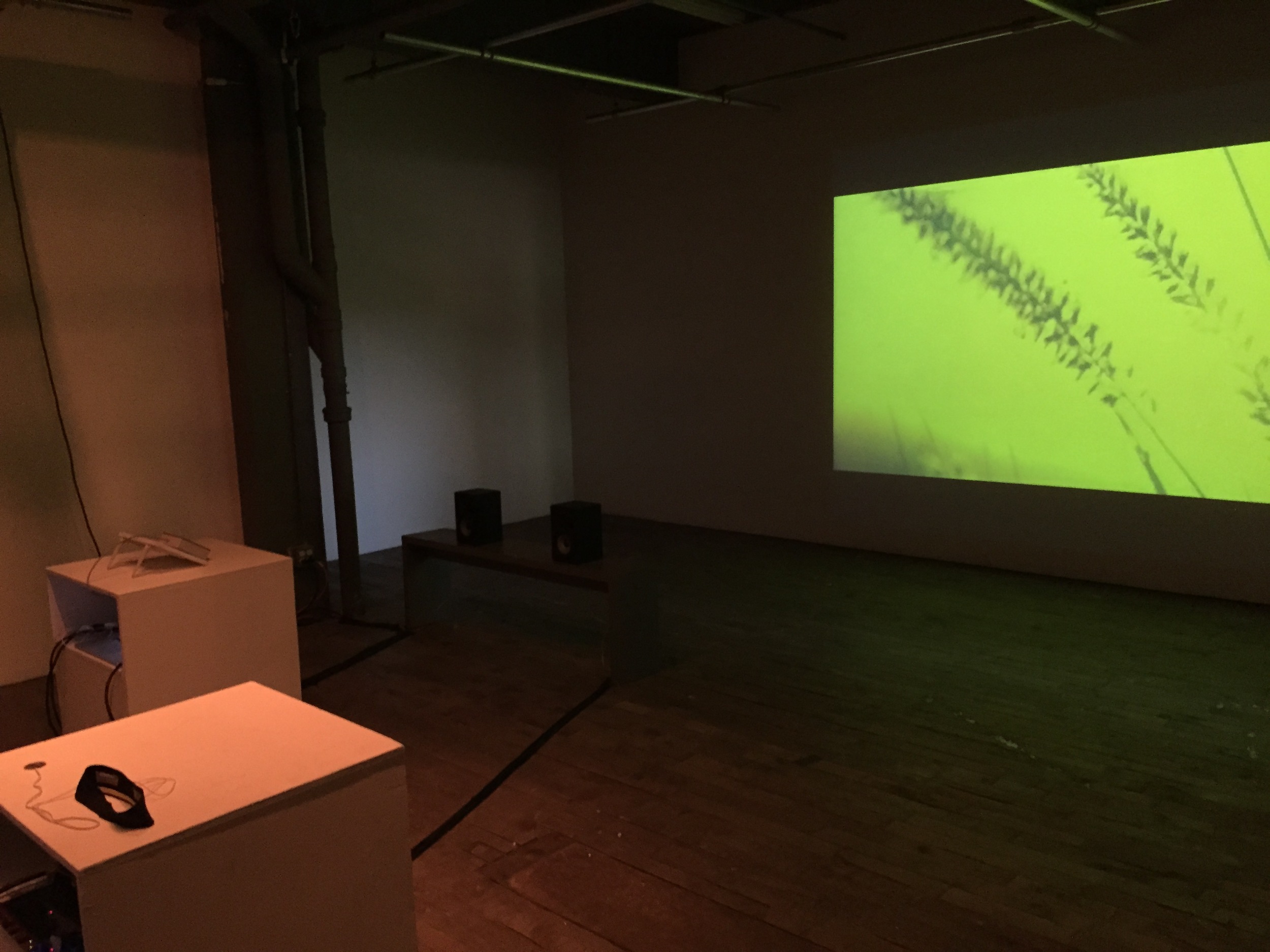

For the Man Who Has Everything

(2015)

MIDI Sprout biorhythmic sensor, tactile sensors, fabricated enclosures, four-channel sound, video, custom software

How do our interactions with other people resonate in our minds? Can strangers or loved ones accurately understand the intent of our actions towards them when outside circumstances often warp their meaning? How do we gauge the effectiveness of our own actions, and can we be sure that we are helping someone if we do not know how they interpret the concept of “help”?

This installation provides two stations of interaction: one that is passive, and one that is active. Wearing the headband creates a stream of polyphonic MIDI data that is analyzed by the custom Max/MSP patch, resulting in a unique video and audio environment generated in real time. Interacting with the tactile sensor box alters the analysis parameters of the MIDI data stream, affecting the video stream witnessed by the headband-wearing participant. The amount of time spent interacting with either station yields different levels of reaction from the generative software, and when two people work in tandem, each person's actions (either passive or active) affect the other’s, resulting in an ever-changing audiovisual environment.

All technology used in this installation was designed by the composer, except for the MIDI Sprout biorhythmic sensor, which was designed by Sam Cusamono.

This installation was supported in part by Signal Culture.

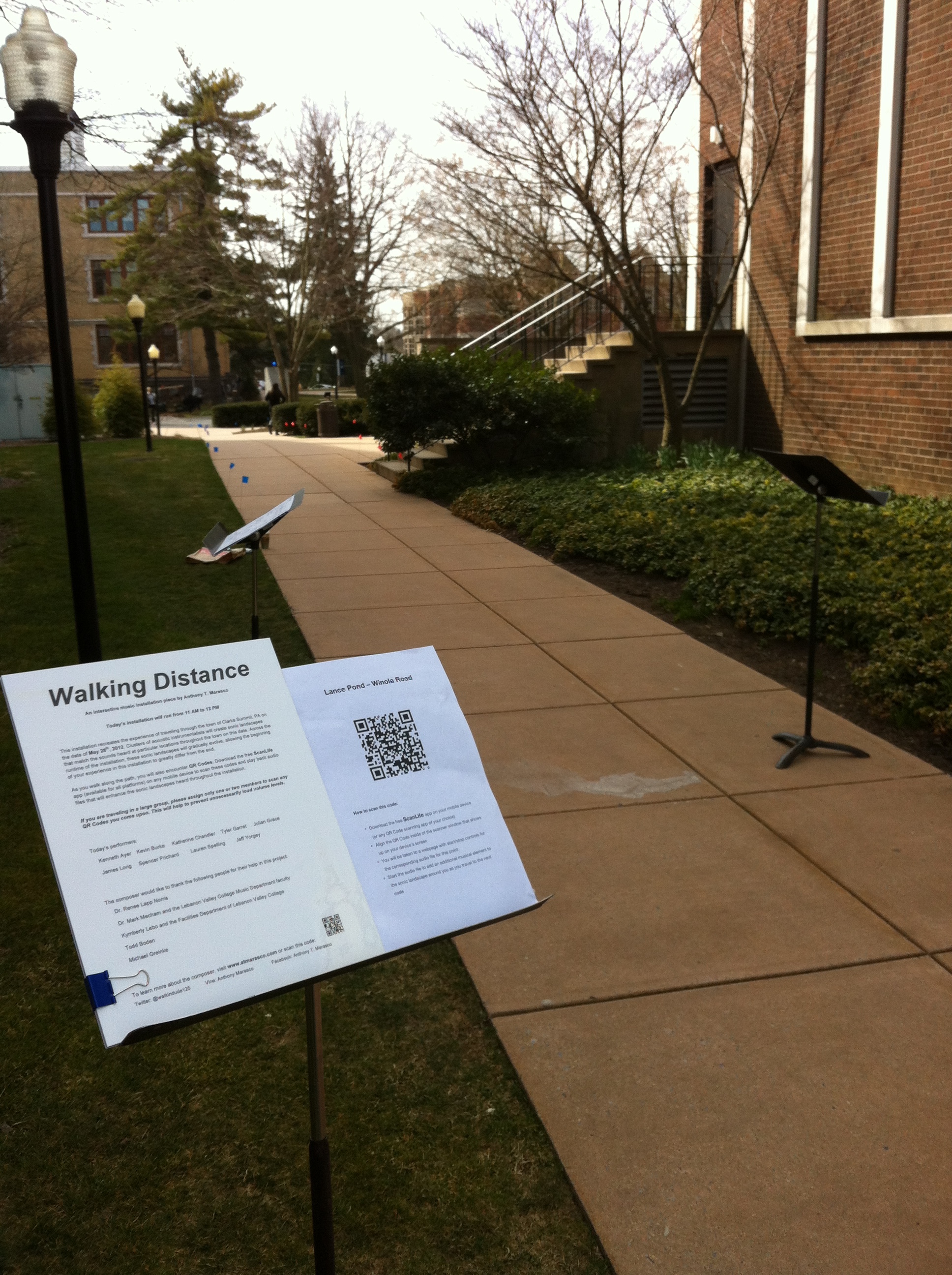

Walking Distance

(2013)

open, acoustic instrumentation and audience-provided electronics

Walking Distance is an interactive, musical installation piece that strives to recreate and reinterpret various sonic atmospheres that were captured across the town of Clarks Summit, Pennsylvania on a handful of specific dates. Audience members travel at their own leisure through a pre-set walking path that emulates the various streets, landmarks, and locales of the town and pass by “clusters” of performers stationed at various waypoints along the path.

While traveling the path, audience members with smartphones are encouraged to scan various QR codes that are placed at select intersections and waypoints. These QR codes link to streaming audio files that—when played back through the audience members’ phones—will add an additional sonic element to their experience of the installation and create unique textural and tonal combinations with the music created by whichever ensemble members they happen to be near.

Members of the performance ensemble are encouraged to change their location throughout the duration of the piece, allowing them the opportunity to perform and experience a multitude of musical and sonic material throughout the installation. These variables provide the ensemble with a piece that can be performed with an extremely flexible duration and instrumentation, and will never sound exactly the same across multiple performances.

The world premiere took place on April 4th, 2013 at Lebanon Valley College in Annville, PA.

Laying of Hands

(2013)

live video processing/mixing, computer (live audio processing), pictures, letters, portable tape recorders, iPad (Synthesizer - Thicket app), melodica, and electric guitar

Laying of Hands is an interactive installation piece centered on physical objects, images, and sonic landscapes that evoke a feeling of nostalgia, family, and history. While the piece is occurring, audience members file into the room and interact with photographs, letters, and tape recorders/players that are located on tables stationed around the perimeter of the room. Microphones are placed near the surface of these tables to pick up the sounds of letters and photographs being shuffled, bent, and flapped, while audio and mechanical noise from the tape players are routed through a small mixer. These ambient sounds are then processed by custom-made software running on a laptop.

While the audience members interact with the items placed on the tables, the melodica, electric guitar, and iPad (Thicket app) performers freely improvise, within predetermined tonal constructs, based on phrases, pictures, and musical fragments located on the included set of Performance Cards.

The world premiere took place on March 21st, 2013 at the Hope Horn Gallery of the University of Scranton in Scranton, PA.

Screenshot of the Out of Conte#t interactive page, featured in "The Connective," a digital-only special issue of WIRED Magazine

Out of Conte#t

(2013)

an interactive piece for live Twitter data and hashtag-assigned musical material

Commissioned by WIRED Magazine and published in the June 2013 issue of The Connective, a digital-only special issue. Available for download in the WIRED Magazine Hub for tablet devices (iPads, Nooks, Android devices).

Out of Conte#t allows users to create their own unique sonic landscape by interacting with hashtags outside of a tweet-based context, much as we are already becoming accustomed to doing by adding them into everyday conversation online or in person.

The ten hashtags listed on the feature's page were selected from the comment thread of a recent Facebook post in which I asked my friends to list the favorite hashtags they use in everyday conversation and text messages, not just on Twitter. I then used the SoundPlant software program (developed by Marcel Blum) to map fragments of sound (bouncy synth notes, drones, metal mixing bowl percussion, warped fragments of ambient sound, etc.) to the letters on my QWERTY keyboard, turning it into a digital orchestra of electronic sounds. By typing out the words that comprise each hashtag, I was able to compose and record individual lines of melody that are then layered on top of the background music whenever the digital app detects a tweet that includes the corresponding hashtag.
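The idea of typing a hashtag to produce a melody can be shown with a small sketch. It does not use SoundPlant or reproduce my actual key assignments: the sample names and the way letters are assigned to them are hypothetical stand-ins.

```python
import string
from typing import List

# Hypothetical sample names standing in for the kinds of sounds described above.
SAMPLES = ["synth_note", "drone", "mixing_bowl", "ambient_fragment"]

# Assumed layout: give every letter a sample by cycling through the list.
KEY_MAP = {
    letter: SAMPLES[i % len(SAMPLES)]
    for i, letter in enumerate(string.ascii_lowercase)
}

def hashtag_to_sequence(hashtag: str) -> List[str]:
    """Return the ordered samples triggered by typing the hashtag's letters."""
    # Characters without a mapped key, such as '#', trigger nothing.
    return [KEY_MAP[ch] for ch in hashtag.lower() if ch in KEY_MAP]
```

Each hashtag spells out a fixed sequence of sounds, so the same tag always yields the same melodic line.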